Group Description

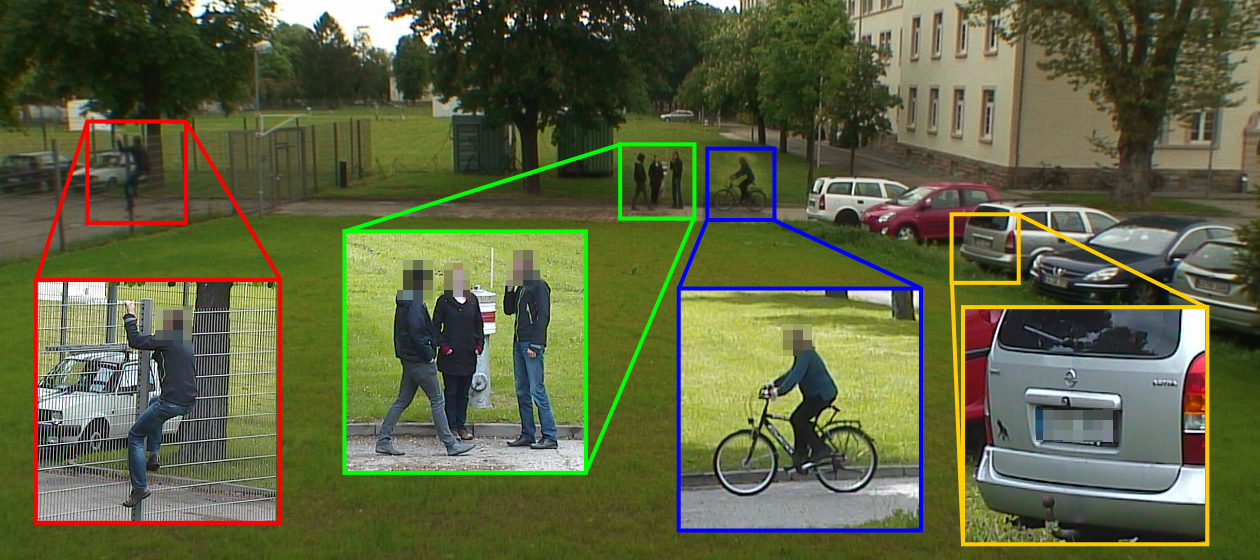

Interest in automated video analysis solutions has grown steadily over the past years. Video analysis methods applied under largely controlled conditions, such as industrial environments, are now an established technology. Despite great progress in this field, the application of video analysis techniques under uncontrolled conditions is still a largely unsolved problem. The major challenges stem mainly from the complexity and variability of unstructured outdoor environments. Further challenges include, for example, objects that exhibit strong variation in shape. Taking these challenges into account, we focus mainly on the following research questions:

- Robustness. The focus lies on the analysis of video data originating from multi-spectral sensor platforms that can move freely through unstructured environments. This includes the detection and tracking of objects, as well as the development of efficient preprocessing mechanisms that support automated scene understanding.

- Adaptivity. Video analysis systems applied in unknown environments must be able to adapt automatically, to a certain degree, to the specific conditions they encounter. Adaptation can be done “offline” by applying statistical approaches, or through methods that learn automatically from the environment.

- Scalability. Current video analytics is mainly restricted to a limited number of object categories or events that can be detected robustly. In general, there is strong interest in methods with advanced discriminative capabilities. To this end, the question of how to scale up video analysis systems is of significant importance.

Fraunhofer Institute of Optronics, System Technologies and Image Exploitation IOSB