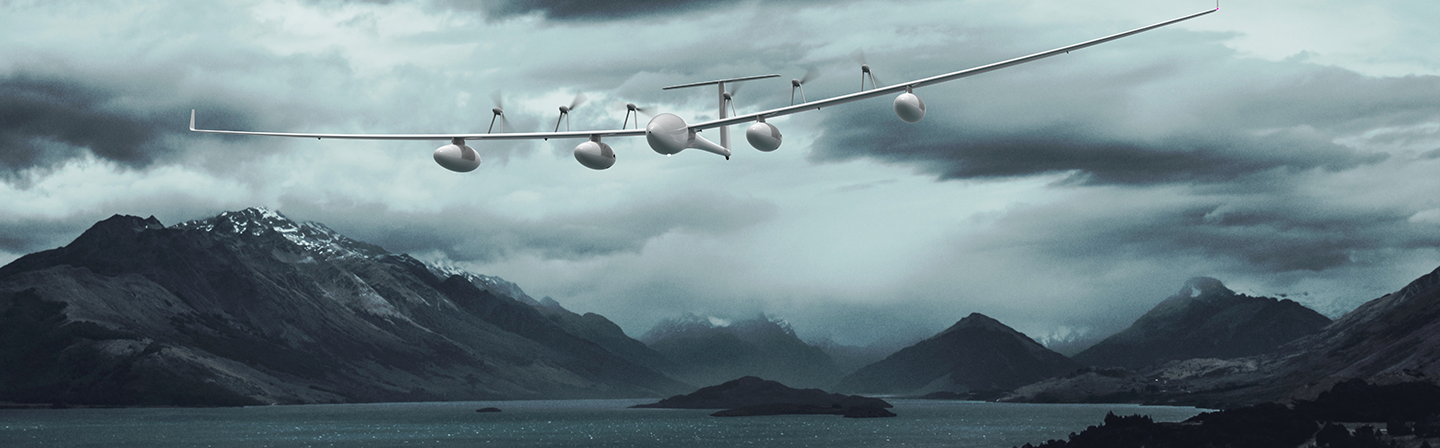

As part of the "BLUEASSIST" project, a concept for an autonomously flying, hybrid-electric glider carrying various sensors as its payload was developed in collaboration with the industrial partners Lange Research Aircraft and M4COM.

Powerful fuel cells give the aircraft a flight duration of up to 40 hours, which would make such a remote sensing system suitable for, among other things, monitoring large maritime areas. Possible applications include monitoring shipping traffic and detecting oil spills, as well as other, mostly sovereign tasks such as border protection and the monitoring of major emergencies like forest fires and floods. Because of the extremely long flight duration in remote regions, special requirements are placed on data acquisition, processing, evaluation and communication with the UAV. To make optimum use of the remote sensing system and to avoid overloading the data link, automated onboard processing and analysis of the data was designed. A complete and detailed evaluation of the data would then take place in the associated ground station, using more powerful and computationally intensive algorithms.

The main activities and objectives of the joint project were:

- In-depth exchange with potential users

- Design of an overall system consisting of the UAV, a coordinated payload for data acquisition and transmission, and an associated ground station

- Development of a hybrid-electric Antares E2 powered by fuel cells

- Concept for the integration of a multi-sensor system, a data transmission unit and a ground control station

Participation of Fraunhofer IOSB - Department SZA

Multi-sensor data processing and evaluation (onboard + ground station)

Widely used camera systems for aerial surveillance include the TC-300 from Trakka Systems. Its swivelling, stabilized design with integrated thermal and HDTV cameras and continuous zoom provides a sufficiently high degree of flexibility. To test the performance of this camera system for ship detection from the air, Fraunhofer IOSB applied artificial intelligence (Mask R-CNN networks pre-trained on MS COCO) to a video sequence from the camera. Mask R-CNN is an instance segmentation method in which incoming images are processed by several sub-networks (a Feature Pyramid Network, FPN, and a Region Proposal Network, RPN) and the detected objects are segmented and classified. Owing to its modular, multi-stage structure, Mask R-CNN is comparatively easy to train for new scenarios.
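The post-processing step of such a ship-detection test can be sketched as follows. This is a minimal illustration, not the pipeline used at Fraunhofer IOSB: it assumes the per-image output layout used by common Mask R-CNN implementations such as torchvision (parallel lists of `boxes`, `labels` and `scores`), and it assumes that ships are represented by the MS COCO "boat" category (id 9). The names `ShipDetection` and `select_ships`, the score threshold and the example values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

# Category id of "boat" in the MS COCO annotation scheme.
COCO_BOAT_ID = 9


@dataclass(frozen=True)
class ShipDetection:
    box: Tuple[float, ...]  # (x_min, y_min, x_max, y_max) in pixels
    score: float            # detection confidence in [0, 1]


def select_ships(prediction: Dict[str, Sequence], min_score: float = 0.5) -> List[ShipDetection]:
    """Keep only confident 'boat' detections from one frame's prediction.

    `prediction` holds parallel sequences under "boxes", "labels" and
    "scores" (assumed layout; plain lists are used here so the sketch
    runs without a deep learning framework).
    """
    ships = []
    for box, label, score in zip(
        prediction["boxes"], prediction["labels"], prediction["scores"]
    ):
        if int(label) == COCO_BOAT_ID and float(score) >= min_score:
            ships.append(ShipDetection(tuple(box), float(score)))
    return ships


# Hypothetical prediction for a single video frame.
example_prediction = {
    "boxes": [[10, 20, 110, 80], [200, 50, 260, 90], [300, 300, 340, 330]],
    "labels": [9, 9, 1],          # two "boat" candidates and one "person"
    "scores": [0.92, 0.31, 0.88],
}

ships = select_ships(example_prediction)
for ship in ships:
    print(ship)
```

With the default threshold, only the first candidate is kept: the second is a low-confidence boat and the third belongs to another class. In practice the threshold would be tuned on annotated aerial footage.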

Fraunhofer IOSB and its SZA department contributed the following to the project:

- Investigation of methods for automated onboard (pre-)evaluation, filtering and compression of data with a view to efficient data transmission

- Development of application-specific evaluation methods and design of the necessary hardware components for the ground station
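One way to picture onboard filtering for efficient data transmission is a simple downlink plan: every frame sends a small metadata record, and the remaining link budget is spent on the images of the frames with the most confident detections. The sketch below is purely illustrative and does not describe the method investigated in the project; `FrameSummary`, `plan_downlink`, `METADATA_BYTES` and all sizes and scores are hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import List

# Assumed size of a metadata-only record (position, time, detections).
METADATA_BYTES = 64


@dataclass(frozen=True)
class FrameSummary:
    frame_id: int
    best_score: float  # highest detection confidence in the frame, 0.0 if none
    image_bytes: int   # size of the compressed image


def plan_downlink(frames: List[FrameSummary], budget_bytes: int,
                  min_score: float = 0.5) -> List[int]:
    """Return the ids of frames whose images are transmitted.

    Metadata for all frames is reserved first; images are then added
    greedily in order of decreasing detection confidence while they fit.
    """
    remaining = budget_bytes - METADATA_BYTES * len(frames)
    selected = []
    for frame in sorted(frames, key=lambda f: f.best_score, reverse=True):
        if frame.best_score < min_score:
            break  # all following frames are even less interesting
        if frame.image_bytes <= remaining:
            selected.append(frame.frame_id)
            remaining -= frame.image_bytes
    return sorted(selected)


# Hypothetical frame summaries produced by the onboard analysis.
frames = [
    FrameSummary(frame_id=0, best_score=0.9, image_bytes=400),
    FrameSummary(frame_id=1, best_score=0.0, image_bytes=400),
    FrameSummary(frame_id=2, best_score=0.7, image_bytes=500),
    FrameSummary(frame_id=3, best_score=0.6, image_bytes=300),
]

image_ids = plan_downlink(frames, budget_bytes=1000)
print(image_ids)
```

The greedy ordering is a deliberate simplification; a real system would also have to account for link availability, latency and the priorities of the respective mission.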

Fraunhofer Institute of Optronics, System Technologies and Image Exploitation IOSB
