Initial Situation - Multi-modal image data from a wide range of sensors:

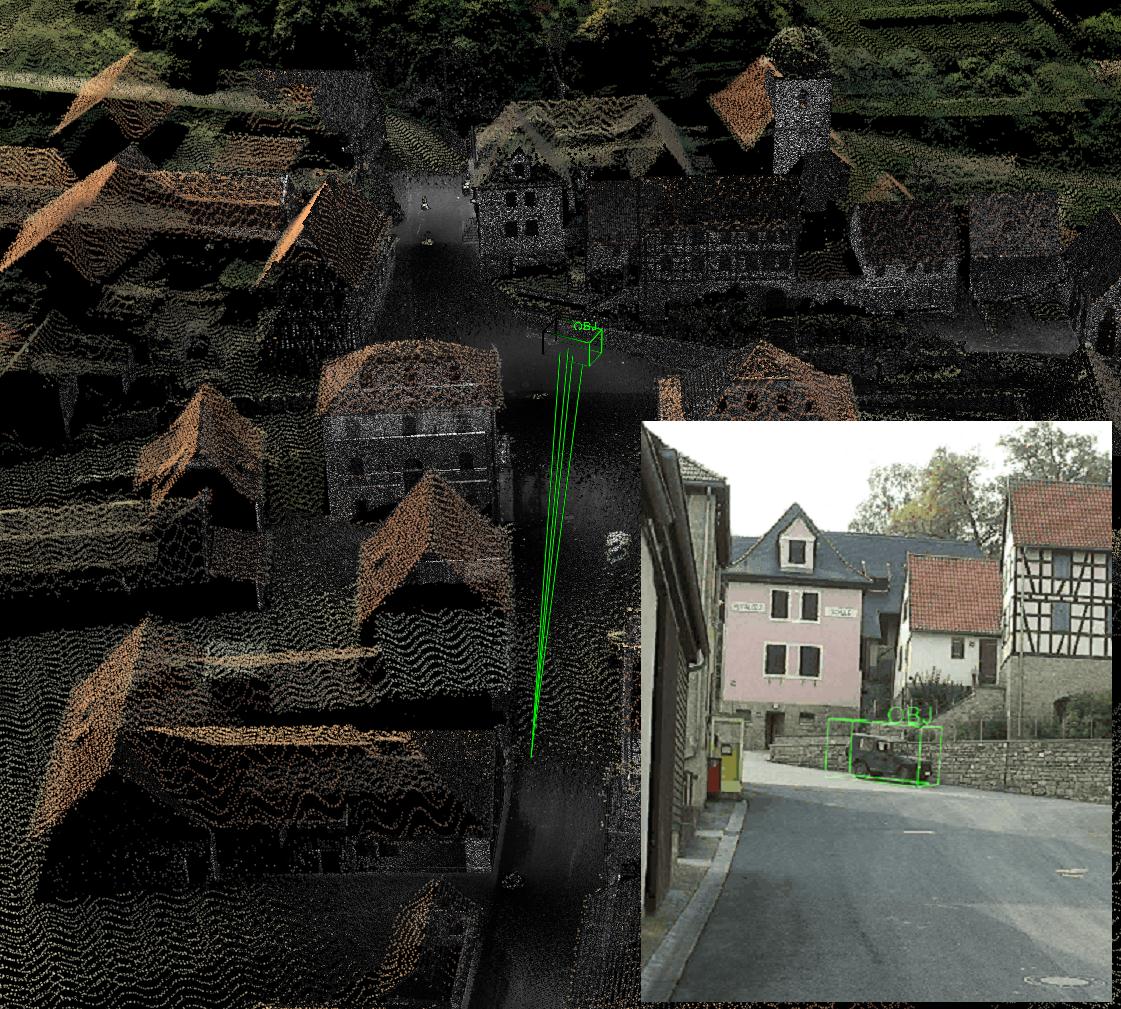

The possibilities for high-precision, three-dimensional surveying of the environment using imaging sensors continue to advance. Modern laser scanner systems, for example, permit rapid and highly accurate 3-D surveying. However, most systems of this type tend to be expensive and difficult to handle. To use 3-D information, most of which is acquired in advance, together with conventional cameras, a multi-modal image data fusion is required. In the process, the exact parameters of the sensors used and their position and orientation relative to the surveyed scene are determined. This makes it possible to derive the 3-D structure of the corresponding image content, which in turn allows objects to be marked and annotated directly in the camera image.

Visualization of 3-D laser scans (consolidated terrestrial and airborne laser scan data), including the calculated positions of objects defined in the photograph.
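Once the camera parameters and pose are known, the 3-D position of an object marked in the photograph can be looked up in the scan. The following is a minimal sketch of that idea, assuming an ideal pinhole camera without lens distortion. The function names `project` and `locate_pixel`, the intrinsics tuple `K = (fx, fy, cx, cy)` and the pixel tolerance `max_px` are illustrative assumptions, not part of the project's software.

```python
import math


def project(point, K, R, t):
    """Project a 3-D world point into the image of a pinhole camera.

    K is (fx, fy, cx, cy); R is a 3x3 rotation (list of rows) and t a
    translation, so that camera coordinates are Xc = R * X + t.
    Returns (u, v, depth) or None if the point lies behind the camera.
    """
    xc = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        return None
    fx, fy, cx, cy = K
    return (fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy, xc[2])


def locate_pixel(pixel, scan_points, K, R, t, max_px=2.0):
    """Return the scan point that explains a marked pixel.

    Among all scan points projecting within max_px of the pixel, the one
    closest to the camera is chosen, since it occludes the others.
    """
    best = None
    for p in scan_points:
        proj = project(p, K, R, t)
        if proj is None:
            continue
        u, v, depth = proj
        if math.hypot(u - pixel[0], v - pixel[1]) <= max_px:
            if best is None or depth < best[0]:
                best = (depth, p)
    return None if best is None else best[1]
```

A brute-force loop over all scan points is only practical for small scans; for larger models a spatial index or a rendered depth image would be the more realistic choice.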

Task

The project aims to develop generic methods for three-dimensional object localization that work with conventional image information in the visible spectrum as well as with image data from other spectral ranges, and that refer to previously recorded 3-D data. Fields of application range from the precise determination of the position and orientation of cameras and of the objects they image, to improved 3-D tracking methods and augmented reality applications.

Result

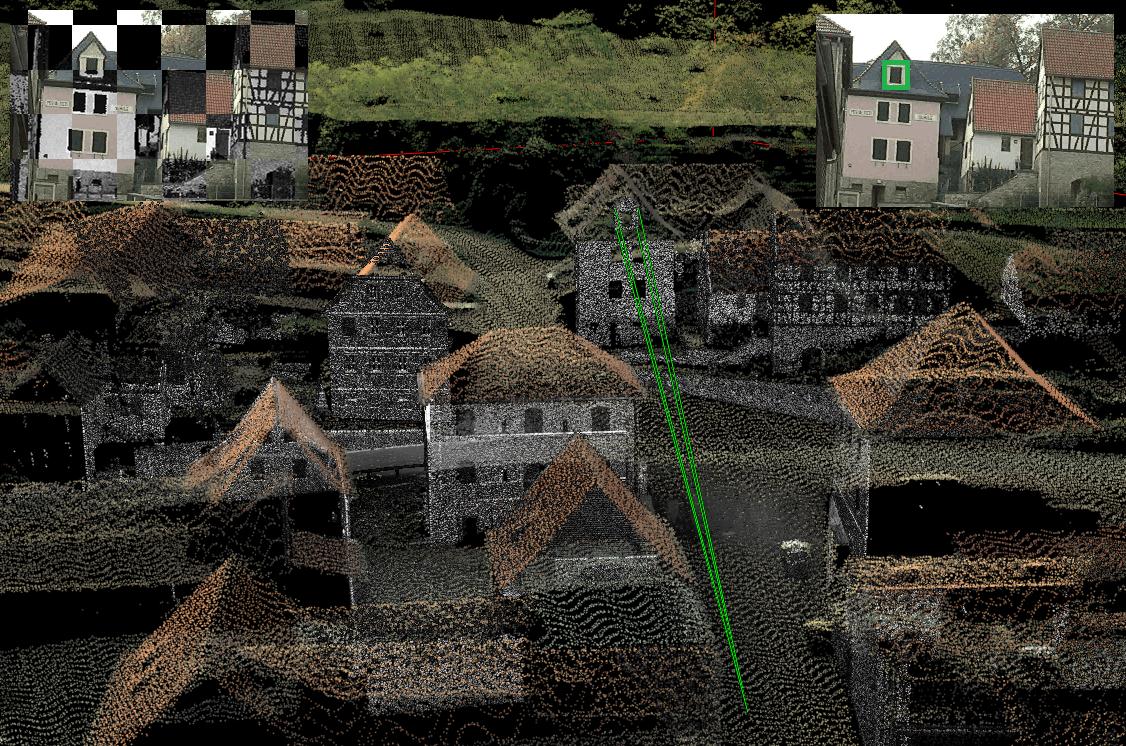

Highly accurate registration methods for image data in the visible and infrared spectral ranges were developed in the project. They can be used with terrestrial and airborne 3-D laser scanning data.

Project Description

The fusion algorithms developed build on new methods for multi-modal correspondence determination and intensity-based image registration. An important aspect of these methods is that they can use 3-D laser scans directly. As a result, 3-D data acquired in real time can be used for referencing without complicated pre-processing, which is often prone to error. All applications share the goal of fully automated fusion of all data sets involved. The methods therefore require no additional sensors and can also be used on mobile devices.

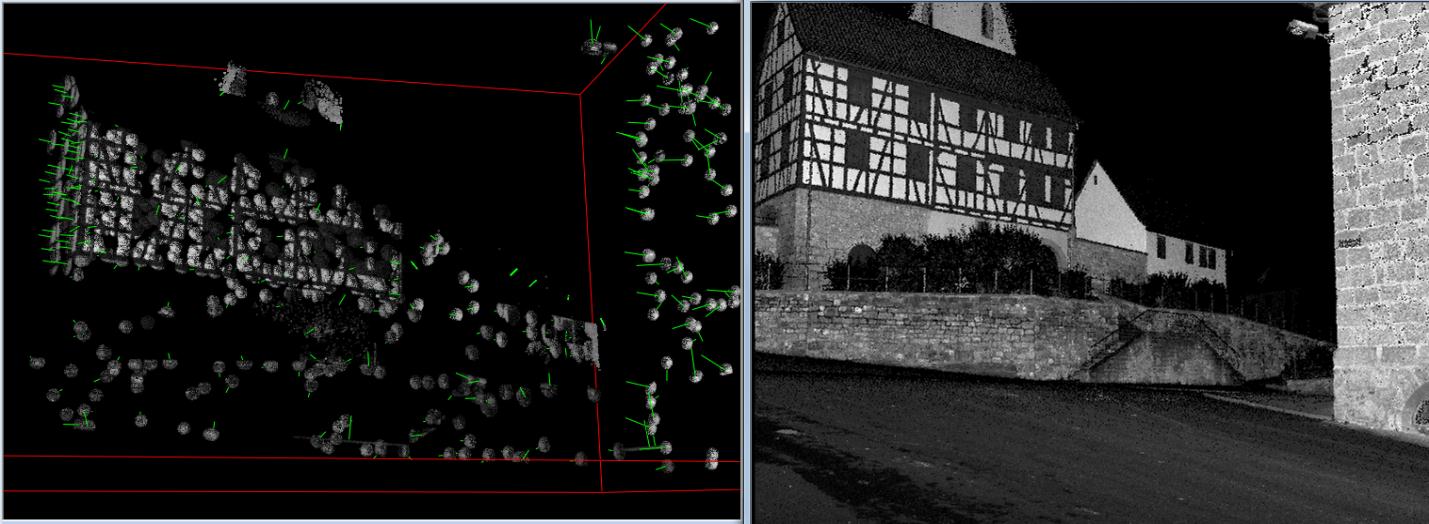

3-D regions (left) extracted from a terrestrial laser scan (right) that carry a high level of information content for recognition. They form the basis for the creation of feature maps for 2-D/3-D registration.

A compact representation of 3-D data that permits efficient searching is crucial for larger environment models, such as laser scans of large areas or entire cities. Environment models are prepared for 2-D recognition by generating virtual camera views and extracting visually distinctive features from the corresponding intensity data of the 3-D data (e.g. laser reflectance values). In the process, hierarchically grouped feature maps with fast search structures are generated, which permit rapid correspondence determination.
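The generation of a virtual camera view from scan intensity data can be sketched as follows. This is a simplified illustration that assumes a pinhole camera and one-pixel point splatting with a z-buffer; the function name `render_virtual_view` and its parameters are hypothetical, and the feature extraction and hierarchical grouping described above are not shown.

```python
def render_virtual_view(points, reflectance, K, R, t, width, height):
    """Render a reflectance image of a point cloud from a virtual camera.

    points:      iterable of (x, y, z) world coordinates
    reflectance: one intensity value per point (e.g. laser reflectance)
    K:           (fx, fy, cx, cy) pinhole intrinsics (assumed, no distortion)
    R, t:        world-to-camera rotation (3x3 rows) and translation
    Returns (image, depth) as nested lists indexed [row][column].
    """
    fx, fy, cx, cy = K
    image = [[0.0] * width for _ in range(height)]
    depth = [[float("inf")] * width for _ in range(height)]
    for p, r in zip(points, reflectance):
        # Transform the point into camera coordinates.
        xc = [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
        if xc[2] <= 0:
            continue  # behind the virtual camera
        u = int(round(fx * xc[0] / xc[2] + cx))
        v = int(round(fy * xc[1] / xc[2] + cy))
        # Z-buffer test: keep only the point nearest to the camera per pixel.
        if 0 <= u < width and 0 <= v < height and xc[2] < depth[v][u]:
            depth[v][u] = xc[2]
            image[v][u] = r
    return image, depth
```

Images rendered this way from several virtual viewpoints could then be passed to any 2-D feature detector to build the searchable feature maps.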

Because object appearances often differ fundamentally across multi-modal data sets, robust correspondence determination algorithms are used, which also take the correct geometric assignment of corresponding features into account. A downstream, intensity-based distance minimization between simulated views and query images then achieves a registration accuracy that meets the highest demands. In addition to conventional statistical distance measures, newly developed methods based on self-similarities are used, which are highly robust against local intensity fluctuations. The methods developed to date for multi-modal image data fusion already make a large number of applications conceivable.
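As an illustration of a conventional statistical distance measure, the sketch below computes the mutual information between a simulated view and a query image. Mutual information is used here as a common example of such a measure; the source does not state which measures the project uses, and the function name and binning parameters are assumptions.

```python
import math
from collections import Counter


def mutual_information(img_a, img_b, bins=8, max_value=256):
    """Mutual information (in bits) of two equally sized grayscale images.

    Images are nested lists of intensities in [0, max_value). Intensities
    are quantized into `bins` levels before the joint histogram is built.
    """
    def quantize(img):
        return [min(int(v * bins / max_value), bins - 1)
                for row in img for v in row]

    a, b = quantize(img_a), quantize(img_b)
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    n = len(a)
    joint = Counter(zip(a, b))      # joint histogram
    hist_a, hist_b = Counter(a), Counter(b)  # marginal histograms
    mi = 0.0
    for (i, j), count in joint.items():
        # p(i,j) * log2( p(i,j) / (p(i) * p(j)) ), written with raw counts
        mi += (count / n) * math.log2(count * n / (hist_a[i] * hist_b[j]))
    return mi
```

Because the measure depends only on the statistical relationship between intensities, an image and its intensity-inverted copy score as high as two identical images. This property is what makes it usable across modalities. In a registration loop, the negative mutual information would be minimized over the camera pose used to render the simulated view.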

Top right: visualization of 3-D laser scans (consolidated terrestrial and airborne laser scan data), including the viewing rays of the window defined in the photograph. Top left: multi-modal image registration techniques enable object referencing with respect to 3-D laser scans.

Using the underlying 3-D data enables a combined analysis of sensors whose image data show no direct correspondence with one another. The laser scans can also serve as a virtual calibration object for improved derivation of current 3-D information from sensors in motion. The 3-D data thus permit a more robust estimation of ego-motion without additional sensors, which allows very inexpensive and compact sensor systems.
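How known 3-D scan points can constrain the motion of a camera is sketched below in a deliberately simplified form. The example assumes that the camera rotation is known (identity), that correspondences between scan points and pixels are already given, and that only the translation is refined. It uses a derivative-free compass search on the reprojection error, which is a stand-in chosen for brevity and not the optimization method of the project. All names are hypothetical.

```python
def reprojection_error(t, points, pixels, K):
    """Sum of squared pixel distances between projected scan points and
    their observed image positions, for camera translation t."""
    fx, fy, cx, cy = K
    err = 0.0
    for (x, y, z), (u, v) in zip(points, pixels):
        xc, yc, zc = x + t[0], y + t[1], z + t[2]
        if zc <= 0:
            return float("inf")  # point behind the camera: invalid pose
        err += (fx * xc / zc + cx - u) ** 2 + (fy * yc / zc + cy - v) ** 2
    return err


def refine_translation(t0, points, pixels, K, step=0.5, tol=1e-8):
    """Refine a translation estimate by compass search.

    Tries a step along each axis in both directions, accepts any move that
    lowers the reprojection error, and halves the step when none does.
    """
    t = list(t0)
    best = reprojection_error(t, points, pixels, K)
    while step > tol:
        improved = False
        for axis in range(3):
            for delta in (step, -step):
                cand = list(t)
                cand[axis] += delta
                err = reprojection_error(cand, points, pixels, K)
                if err < best:
                    t, best, improved = cand, err, True
        if not improved:
            step /= 2
    return t, best
```

Applied frame by frame, with the previous result as the starting value `t0`, such a refinement yields a camera trajectory that is anchored to the scan rather than accumulated from image-to-image motion alone. A full solution would also estimate the rotation and would typically use a gradient-based solver.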

References

- Bodensteiner, C., Hebel, M., Arens, M.: Accurate Single Image Multi-Modal Camera Pose Estimation. ECCV – RMLE, 2010.

- Bodensteiner, C., Huebner, W., Juengling, K., Mueller, J., Arens, M.: Local Multi-Modal Image Matching Based on Self-Similarity. IEEE – ICIP, 2010.

- Bodensteiner, C.: A Situation Awareness and Information Handover System for Small Unit Operations. Trilateral-Workshop (SE-NL-DE) Team Situation Awareness in Small Unit Operations, Stockholm, 2011.

- Bodensteiner, C., Hübner, W., Jüngling, K., Solbrig, P., Arens, M.: Monocular Camera Trajectory Optimization using LiDAR Data. Computer Vision in Vehicle Technology (CVVT), ICCV, Barcelona, 2011.

Fraunhofer Institute of Optronics, System Technologies and Image Exploitation IOSB