Trajectory Prediction Benchmark

Existing benchmark datasets target the overall performance of trajectory prediction models but offer little insight into a model's behavior under specific conditions. To this end, a new benchmark dataset is proposed that is intended to complement existing benchmarks. It consists of synthetically generated trajectories and modified real-world trajectories from established datasets, with scenario-dependent training and test splits. The benchmark defines a hierarchy of three inference tasks (representation learning, de-noising, and prediction), each comprising several test cases that target specific aspects of a given machine learning model. This allows a differentiated evaluation of a model's behavior and generalization capabilities. The result is a sanity check for single-trajectory models that aims to prevent failure cases and highlights requirements for improving modeling capabilities.

The download link and evaluation instructions will be available on GitHub.

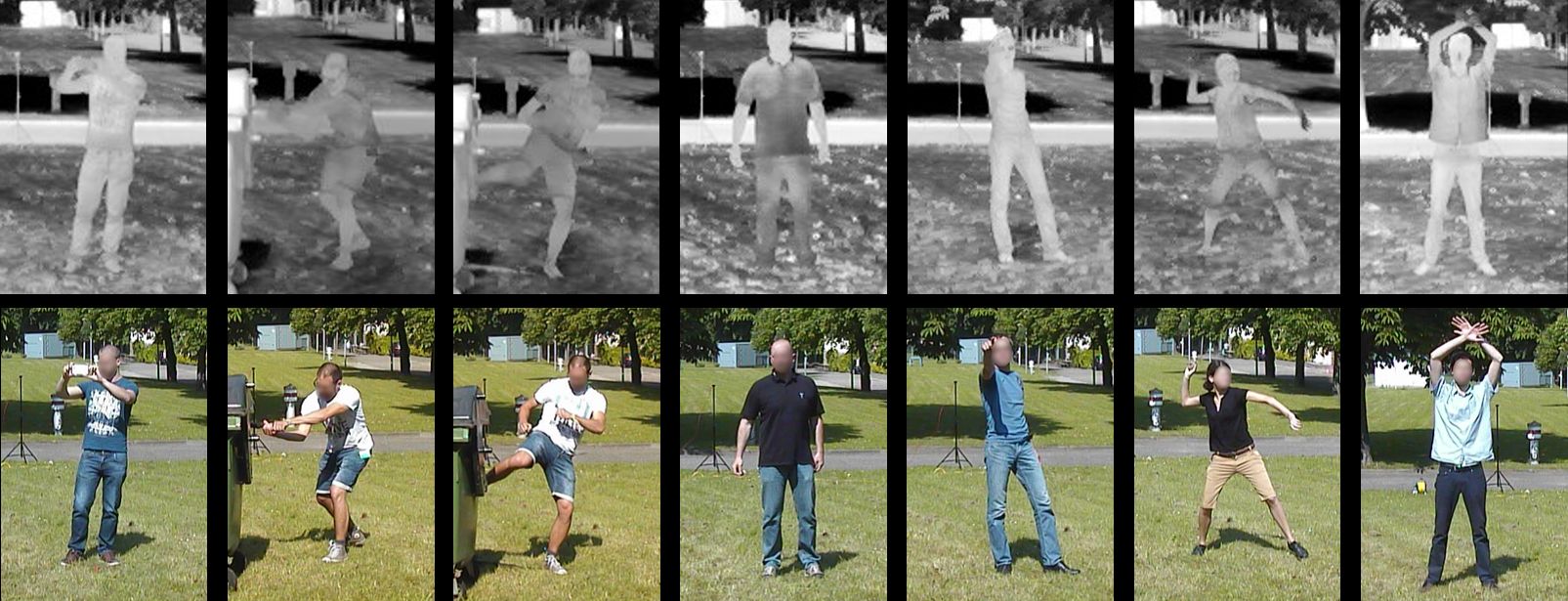

Multispectral Action Dataset

The IOSB Multispectral Action Dataset is an outdoor database of different violent and non-violent actions, recorded on a sunny summer day from two different views, each captured with an infrared (IR) and a visible-spectrum (TV) camera.

For the infrared spectrum, two AXIS Q1922 cameras with a spectral range of 8–14 µm (longwave IR) were used, with a focal length of 10 mm for the 0° camera and 35 mm for the 90° camera. For the visible spectrum, an AXIS Q5534 and an AXIS Q1755 camera were used for the 0° and 90° views, respectively.

The post-processed images have a resolution of 800×600 px for the TV data and 640×480 px for the IR data, with a frame rate of 25 fps. The dataset contains ten persons (8 male, 2 female, aged 31.2 ± 5.7 years) performing six different actions: filming with a smartphone (film), hitting an object with a stick (hit), kicking an object (kick), pointing a finger at something (point), throwing an object (throw), and waving with both arms (wave). In addition, a rejection class (other) is included, in which the persons stand without performing any specific action.
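For users loading the data programmatically, the classes and recording parameters described above can be collected in a small lookup structure. The following is a minimal Python sketch under stated assumptions: the integer encoding of the labels (in the order listed above) and the helper `frame_to_seconds` are hypothetical illustrations, not the dataset's actual annotation format, which is not specified here.

```python
from enum import IntEnum


# Hypothetical integer encoding of the seven classes; the real annotation
# format of the dataset may differ. The order follows the description.
class Action(IntEnum):
    FILM = 0   # filming with a smartphone
    HIT = 1    # hitting an object with a stick
    KICK = 2   # kicking an object
    POINT = 3  # pointing a finger at something
    THROW = 4  # throwing an object
    WAVE = 5   # waving with both arms
    OTHER = 6  # rejection class: standing without a specific action


FPS = 25  # frame rate stated for the post-processed recordings

# Frame resolution (width, height) in pixels per modality.
RESOLUTION = {"tv": (800, 600), "ir": (640, 480)}


def frame_to_seconds(frame_index: int, fps: int = FPS) -> float:
    """Convert a zero-based frame index to a timestamp in seconds (hypothetical helper)."""
    if frame_index < 0:
        raise ValueError("frame_index must be non-negative")
    return frame_index / fps


print(len(Action))           # 7
print(frame_to_seconds(50))  # 2.0
print(RESOLUTION["ir"])      # (640, 480)
```

Using an `IntEnum` keeps class names readable while still allowing the labels to be used directly as integer indices, for example as targets for a classifier.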

The download link and password will be provided upon request to ann-kristin.grosselfinger@iosb.fraunhofer.de.

Please acknowledge the source of the dataset in all publications in which it is used by referencing the following paper:

Fraunhofer Institute of Optronics, System Technologies and Image Exploitation IOSB
